One of those PowerPivot features that is no very well known is that PowerPivot can be used as a database that can serve many other tools besides Excel.

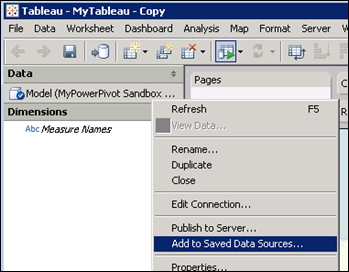

I blogged about it a little bit here when I mentioned that PowerPivot can be consumed through other tools like Tableau. However, I think is worth expanding on the topic a bit as this feature can be very useful for people migrating from “Personal BI” to “Team BI” or even “Corporate BI”.

Imagine a scenario in which you have developed a PowerPivot workbook and have then published it to a PowerPivot Gallery in SharePoint. Your report has now proven to be a success and its popularity has been rising recently across your company. A business unit manager now wants to distribute this report to his team members, but filtering it to only the data that pertains to each of them. The PowerPivot workbook has a big amount of data, demanding the 64-bit version on PowerPivot. The manager as well as his team members cannot download the workbook and use it locally as they only have PowerPivot 32-bit version installed and due to corporate policies they do not expect to be upgraded soon.

One option is for the manager to manually copy and paste the pivot table displayed on the browser into a separate excel file. He would have to do this, every day, for each team member as he can only sent the portion of the data that belongs to that specific employee. Not a great solution.

The other option would be to create a separate PowerPivot workbook for each of the team members with only his data. Not a great solution either as the data model and DAX calculations would have to be replicated, and if you need to change one of them one day, you would have to remember to change it in each of the replicas.

What to do? I want to point out that you can read a PowerPivot that has been published to SharePoint from a great number of other tools besides Excel. Some of these tools can enhance the PowerPivot implementation, giving us features to solve the scenario in question.

For this example, let’s stick with Microsoft reporting tools. Besides Excel itself, you can read PowerPivot data from Report Builder, SQL Server Reporting Services and PerformancePoint. All of them have the capability to read from published PowerPivots. SQL Server Reporting Services (SSRS), for example, will give you additional capabilities that will allow you to leverage your PowerPivot workbook but offer more advanced reporting layouts and delivery mechanisms, this last feature being what we need for our example.

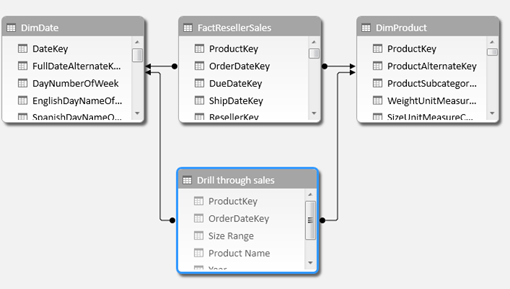

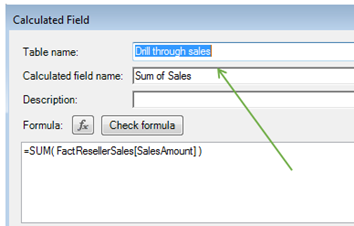

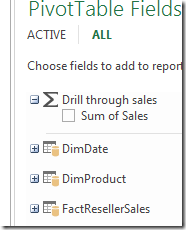

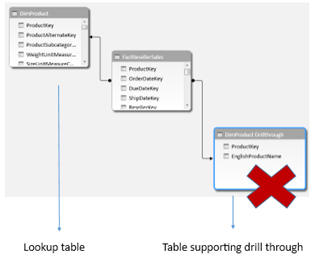

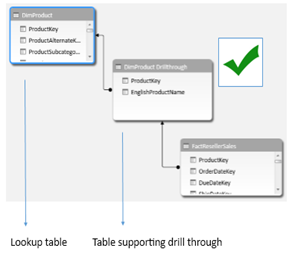

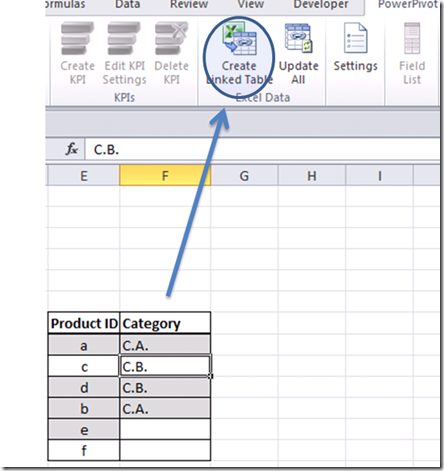

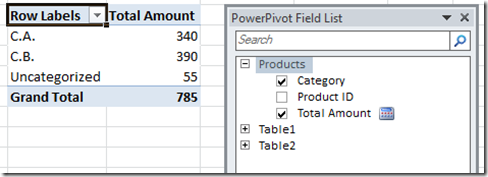

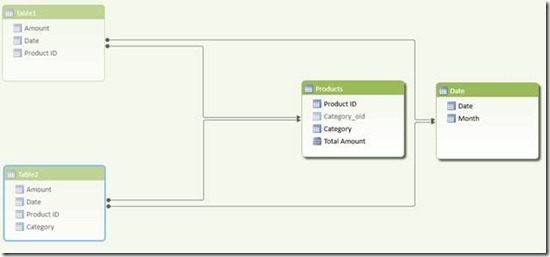

When you read PowerPivot from another tool, you are not reading the pivot table in the workbook but the entire data model available in the PowerPivot window. This is true even for Excel itself, being a client to the PowerPivot database.

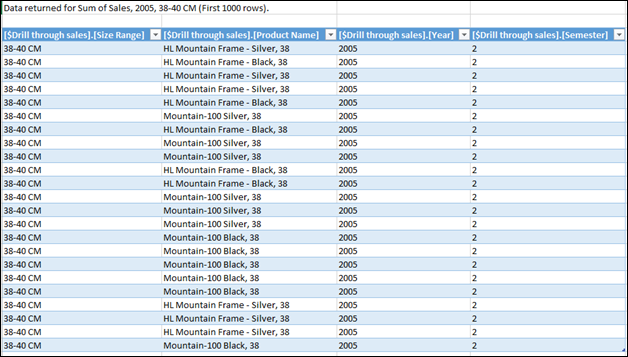

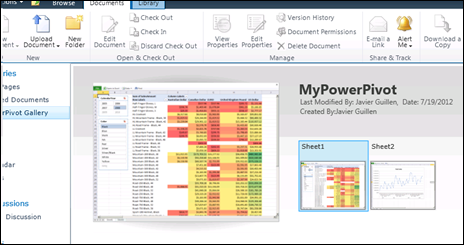

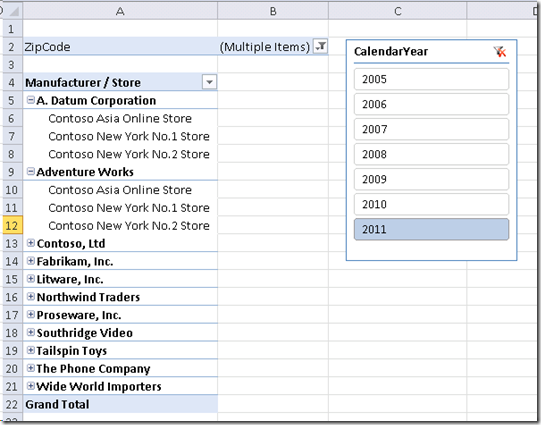

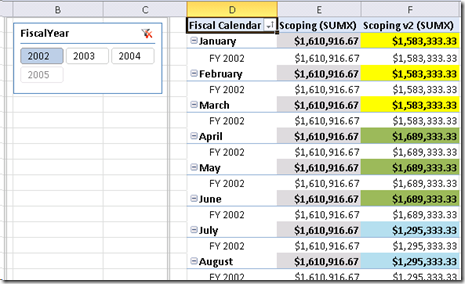

Lets continue with the scenario given. This is how my PowerPivot looks like in SharePoint, once I have published it:

So far so good. This is what most of the business users want to see and interact with.

But other than the nice Silverlight interface, something else happens when the PowerPivot was published and someone interacted with it (via slicers or filters) or by scheduling an automatic data refresh: a real database is generated in the form of a SQL Server Analysis Services BISM Tabular model. You can verify this by opening up the dedicated SQL Server Analysis Services instance which the PowerPivot add-in for SharePoint uses (this is a requirement for the add-in to work). In the case of my published report, I see the following through SQL Server Management Studio (SSMS):

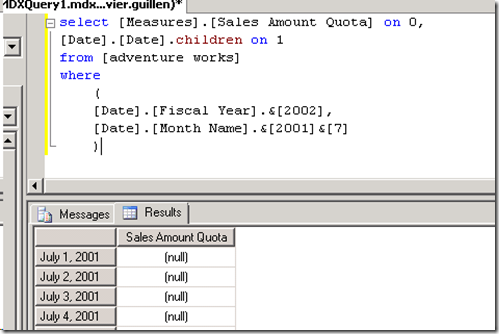

The highlighted database is quite literally my published workbook, in database form. If you happen to know some MDX you can then open a new query window and query the PowerPivot database (called a “model”) using this language. Note that you can be querying, in MDX, a measure that was developed in DAX – like in the case below. Additionally, you can create your own MDX calculated members as part of the query if necessary.

if you feel more familiar with DAX, though, you can query the PowerPivot model with it instead of MDX:

The point here is that PowerPivot is now stripped out of its Excel dependency and can be now queried from by many other tools (When SharePoint renders the workbook on the browser, it uses this SQL Server Analysis Services model with an Excel Services front end; however the Excel desktop application is not needed anymore).

Going back to our scenario, we will be building a solution in SQL Server Reporting Services that will take a parameter and deliver a scheduled report based on filtered data coming from the PowerPivot model.

If you are not familiar with SQL Server Reporting Services (SSRS), I would recommend you start with Report Builder 3.0 which is a more data analyst centric version of the full blown SSRS product, yet it leverages the same underlying technology.

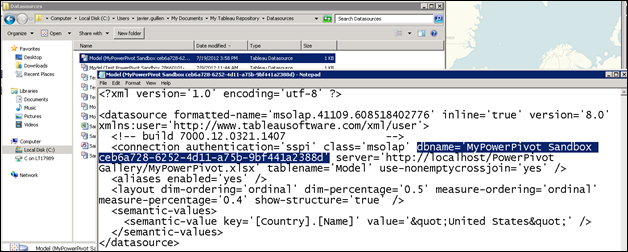

When connecting SSRS to PowerPivot, remember to always do it through the URL that hosts the workbook in SharePoint. This is a very important point, as when you decide to enhance your PowerPivot workbook and republish it, a new cache copy will be created in SQL Server Analysis Services. By using the URL instead of a direct connection to the model, you will be certain to always consume the ‘latest & greatest’ version of the workbook.

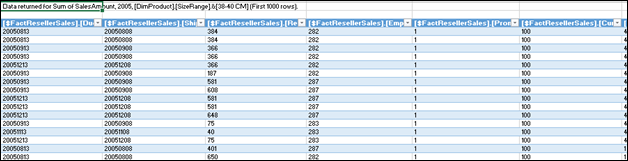

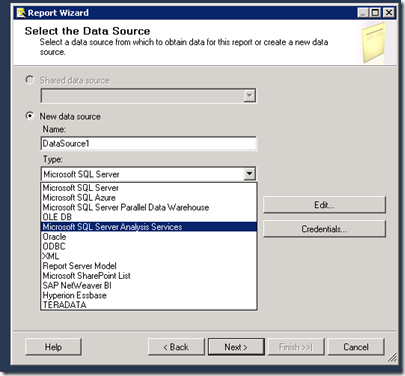

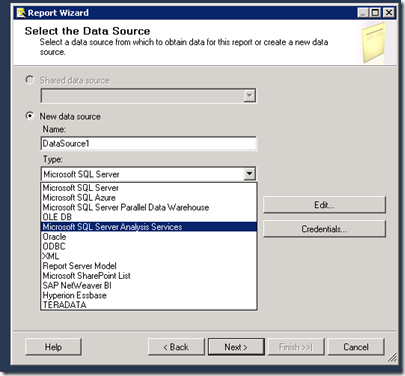

In Visual Studio 2010, you can create a new SSRS data source by selecting the Analysis Services provider:

Click on Edit and use the SharePoint location of the PowerPivot workbook to select the Model:

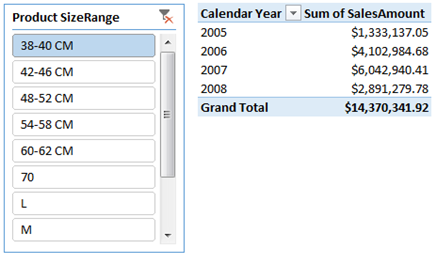

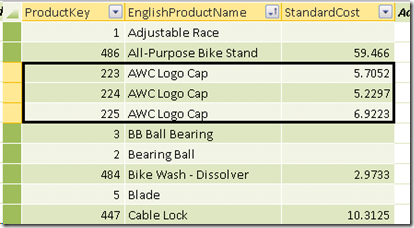

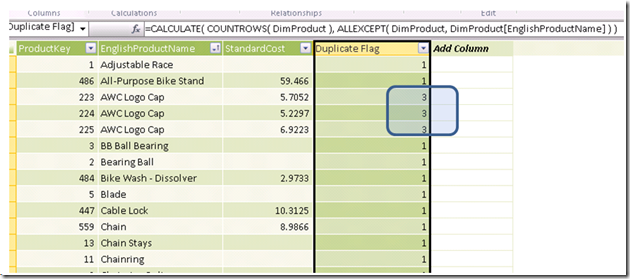

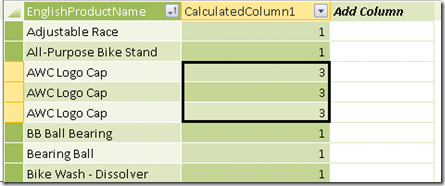

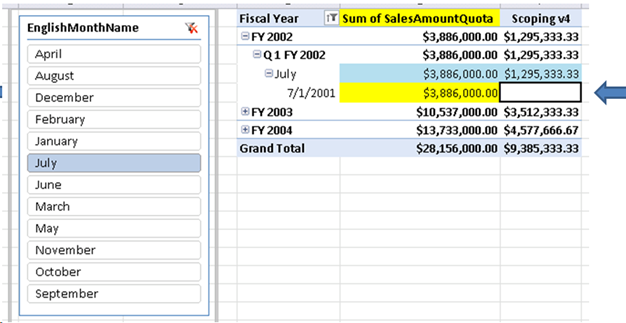

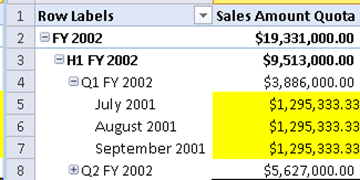

With the connection to PowerPivot now created, you can use the MDX query editor to drag and drop the elements needed for the report. In this case, I have created a parameter based on employee email, as I will be using this for generating a filtered version of the report for each employee I am interested on:

Below is how the final PowerPivot-connected SSRS report looks like. The currency amounts shown are associated only to the employee in question (filtered by his email account, selected with the dropdown at the top of the report):

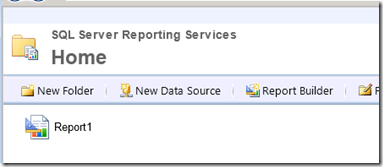

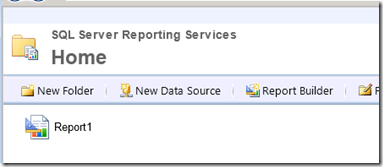

We can now deploy the report to the report server:

In order to schedule an automated run that goes a number of email values and delivers the report with filtered data we must configure an SSRS snapshot. Notice that by doing so we are now using capabilities beyond those of a pure PowerPivot implementation.

There are many websites that explain the process of generating an SSRS subscription so I won’t go into a lot detail here, but I was want to show how the process would look like in general terms.

What we want to do at this point is select the report and click on ‘Manage’. That will give you access to a set of features built into the SSRS framework.

First, select ‘data sources’. On it, be sure to specify the account that will be used to query the PowerPivot model:

After that, click on ‘Subscriptions’ and then on ‘New Data Driven Subscription’. Following that, give your new subscription a name and specify a data source that contains recipient information. This data source does *not* need to be in PowerPivot, it can be a table in a SQL Server database that specifies the information about where and how to deliver the automatic snapshot.

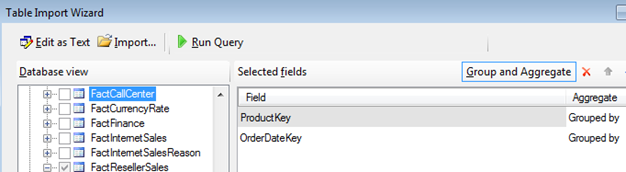

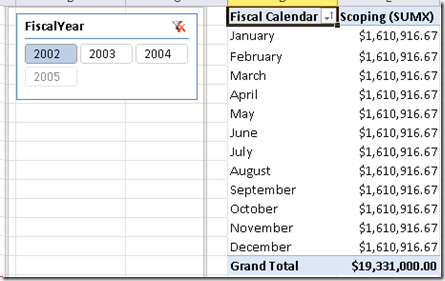

In my case, I decided for the creation of a file on a network share instead of email delivery. As such, the query specified next in the wizard should contain that information, as well as specifying how the report parameter (employee email address) should be set on each iteration of the snapshot:

Here is how my SSRS_Subscription table looks like:

In other words, I want to create two files: One called ‘JavierSnapshot’ which was the report filtered for the email address amy0@adventure-works.com and another one called ‘LeeSnapshot’ which should filter the report for garrett1@adventure-works.com. These are just examples, in your case you may want to use specific output files mapping, for example, sales manager with sales territories.

The SSRS subscription wizard now instructs us to map the output of the table with the chosen delivery attributes (file delivery in this case), as well as mapping output columns with report parameters:

At the end, the wizard asks you for the schedule on which you want this process to execute.

Here is what the output looks like, in my case, after the subscription has automatically executed:

In summary, each PDF was generated by the SSRS report querying the PowerPivot model, but only filtering for a specific employee and automatically delivering the output to a folder in the network. Pretty useful functionality that can enhance the options available to your self-service environment.