Denormalizing tables in Power Query

January 5, 2014 5 Comments

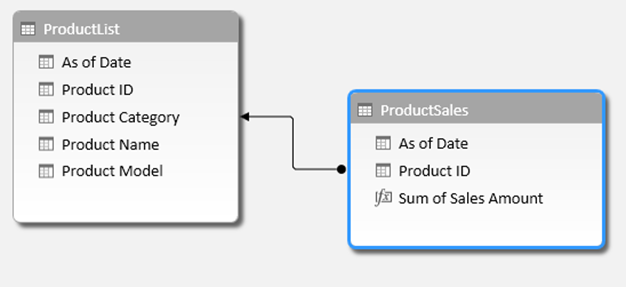

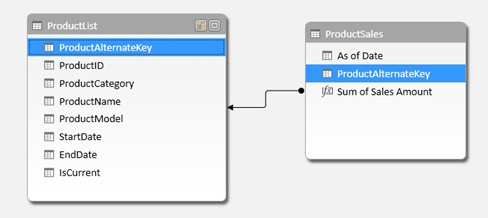

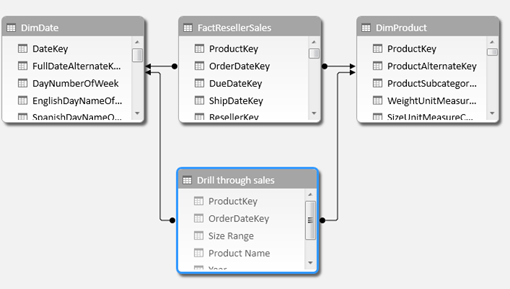

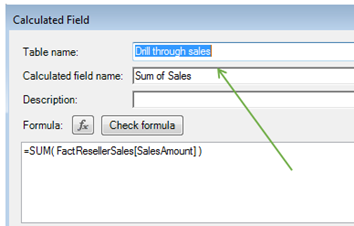

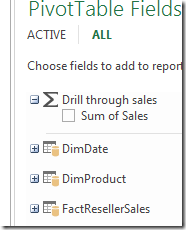

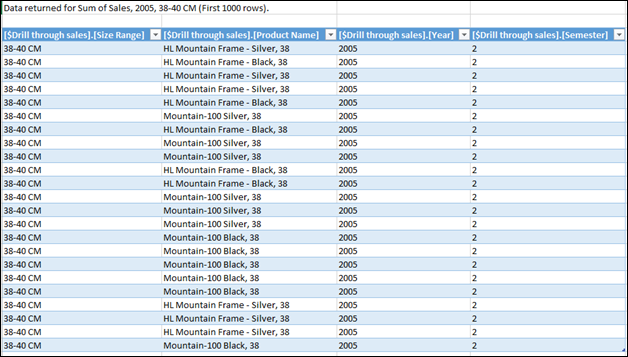

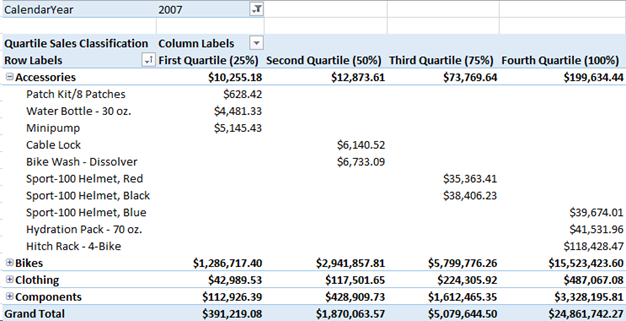

Given a great number of Power Query data load and transform scripts will end up populating an Excel Data Model, it is important to keep in mind that a dimensional approach is preferred when shaping data. Clearly one of the steps we want to follow, whenever possible, is that of denormalizing tables to more properly reflect logical entities.

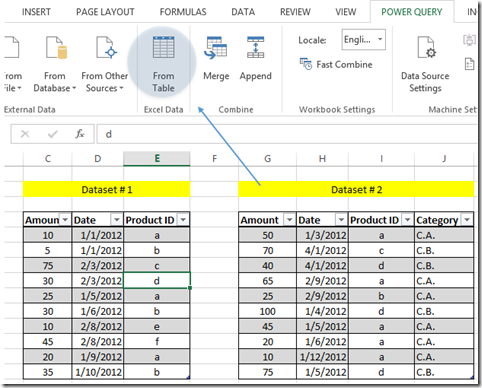

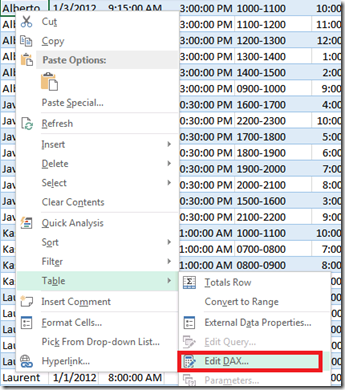

Using the Power Query UI, it is easy enough to load tables and merge them. Unfortunately, relying solely on the UI can generate a rather inefficient process involving multiple M scripts.

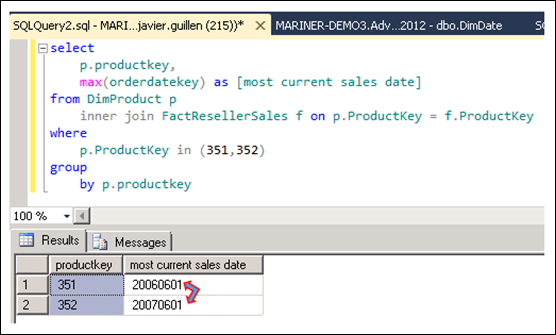

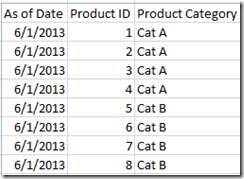

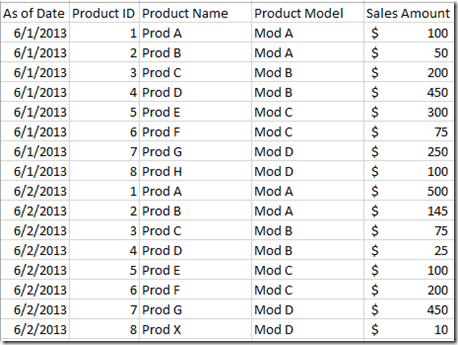

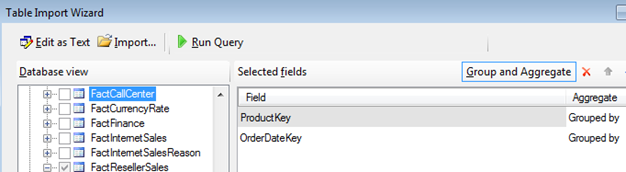

Let’s say for example that we want to combine Adventure Works tables DimProduct, DimProductSubcategory and DimProductCategory to create a consolidated Product table containing a few product attributes as well as the subcategory and category names.

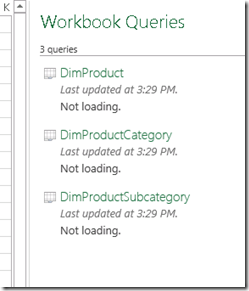

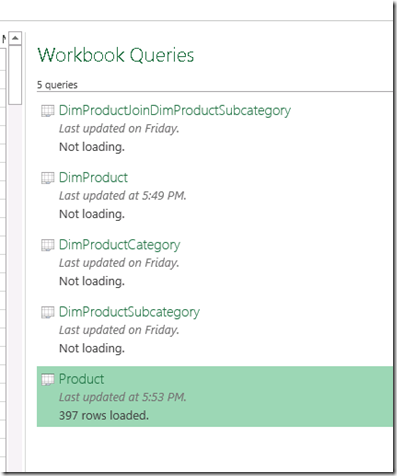

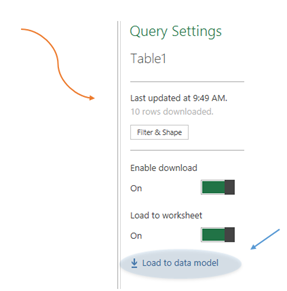

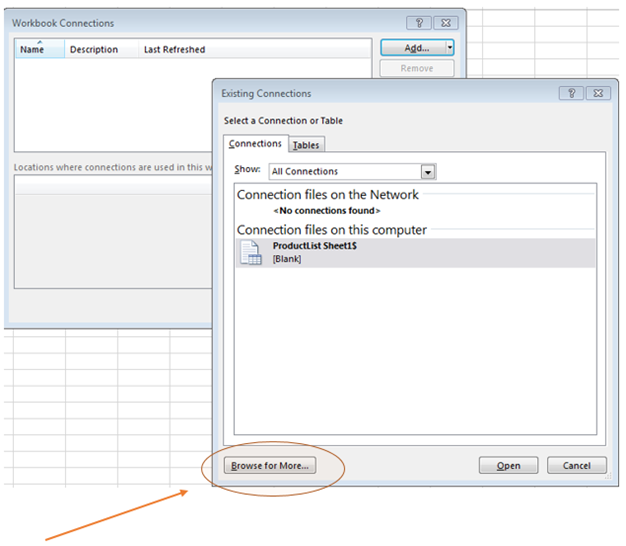

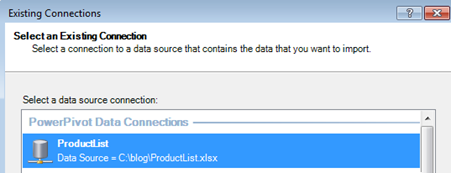

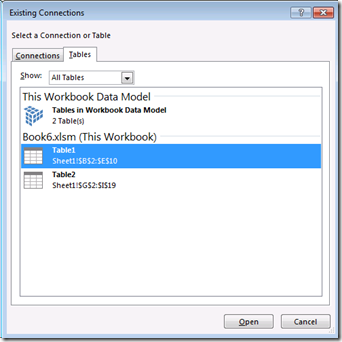

If you start querying table by table, you end up with three workbook queries. As we are not yet ready for loading into the Data Model, I have unselected that option.

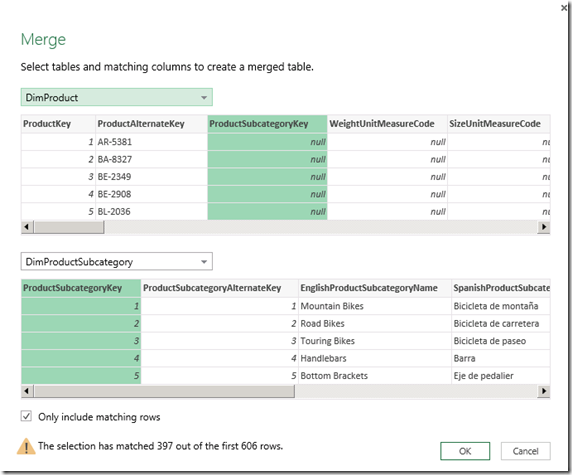

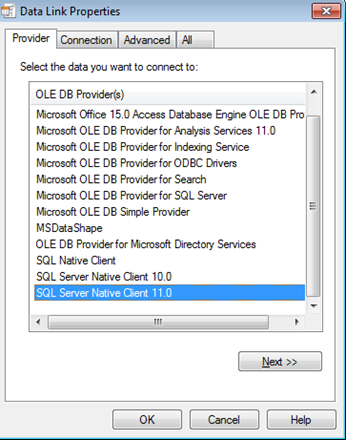

We still need two additional scripts to denormalize. One will consolidate DimProduct into DimProductSubcategory (we can call the ouptut DimProductJoinDimProductSubcategory). By selecting the Merge option in the toolbar, we are presented with a window that allows us to merge two resultsets by specifying a matching column. In this case, we start by merging DimProduct with DimProductSubcategory via the ProductSubcategoryKey.

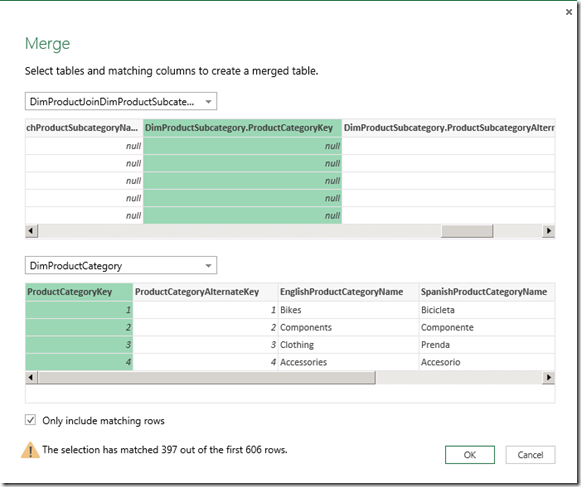

Unfortunately, as this window only allows us to merge two datasets at once, we have to walk one more step in order to merge DimProductJoinDimProductSubcategory with DimProductCategory and get all the necessary data we want in one table.

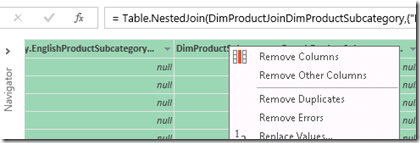

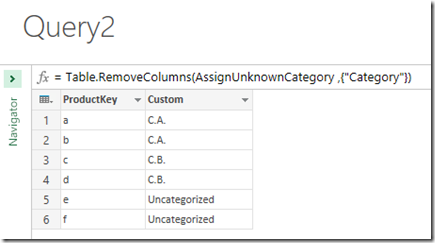

As the result of this process will contain columns from all tables, we must remove the columns we don’t need.

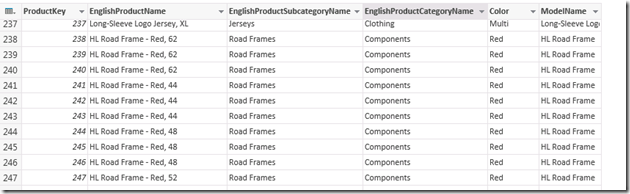

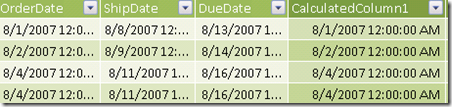

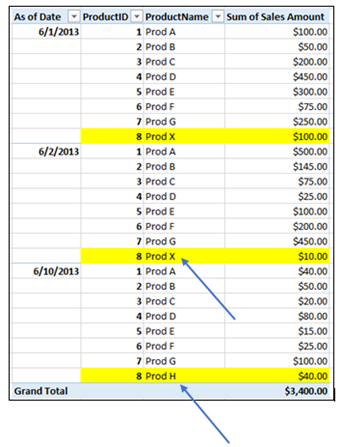

Finally we end up with the table we were looking for: “Product”:

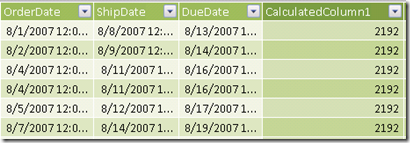

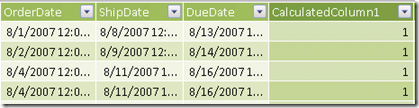

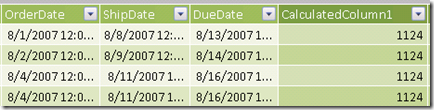

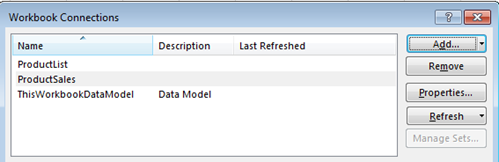

Though it was fairly easy to do, its a relatively inefficient process that requires the refreshing of 4 intermediary scripts that add little to no value as independent queries:

Instead, we are better off generating the denormalizing effect as a single M script, which actually runs more efficiently as:

- requires one query refresh instead of five.

- selects only the required columns (improving efficiency), instead of selecting all columns and then removing the unwanted ones.

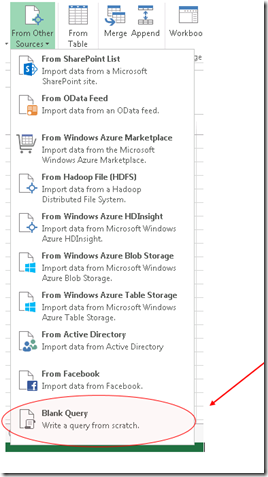

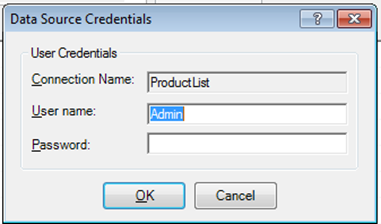

To do so, we can start instead with a blank query,

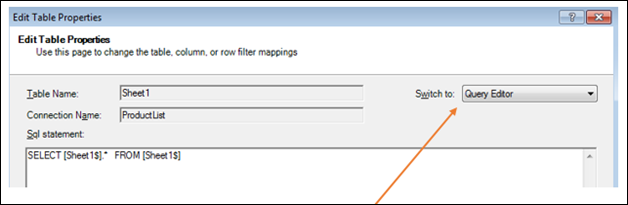

and on it, replicate the steps followed before but this time using systematic, step by step approach that consolidates all steps and does not require maintenance of separate scripts:

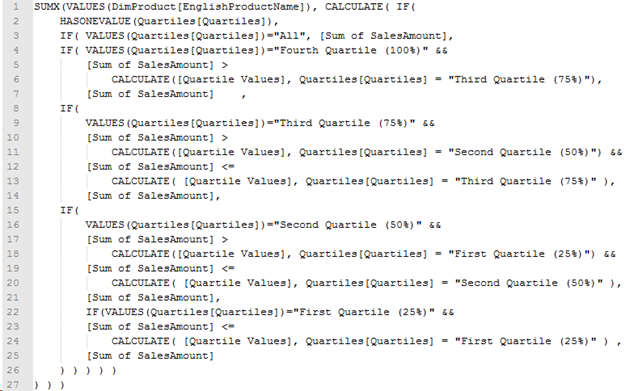

let

Source = Sql.Database("localhost", "AdventureWorksDW2012"),

DimProduct = Source{[Name="DimProduct"]}[Data],

DimProduct.Result = Table.SelectColumns (DimProduct,

{"ProductKey",

"EnglishProductName",

"Color",

"ModelName",

"ProductSubcategoryKey" } ),DimProductSubcategory = Source{[Name="DimProductSubcategory"]}[Data],

DimProductSubcategory.Result = Table.SelectColumns ( DimProductSubcategory,

{"EnglishProductSubcategoryName",

"ProductSubcategoryKey",

"ProductCategoryKey"}),DimProductCategory = Source{[Name="DimProductCategory"]}[Data],

DimProductCategory.Result = Table.SelectColumns ( DimProductCategory,

{"EnglishProductCategoryName",

"ProductCategoryKey"}),/* Join DimProduct to DimProductSubcategory */

DimProductJoinDimProductSubcategory = Table.Join(DimProduct.Result,

"ProductSubcategoryKey", DimProductSubcategory.Result , "ProductSubcategoryKey" ) ,/* Join Product and Subcategories to Categories */

Product = Table.Join( DimProductJoinDimProductSubcategory, "ProductCategoryKey",

DimProductCategory.Result, "ProductCategoryKey" ),/* Select Final Result */

Product.Result = Table.SelectColumns (Product, {

"ProductKey",

"EnglishProductName",

"EnglishProductSubcategoryName",

"EnglishProductCategoryName",

"Color",

"ModelName" } )in

Product.Result

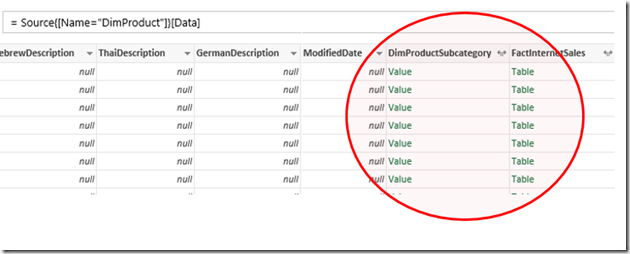

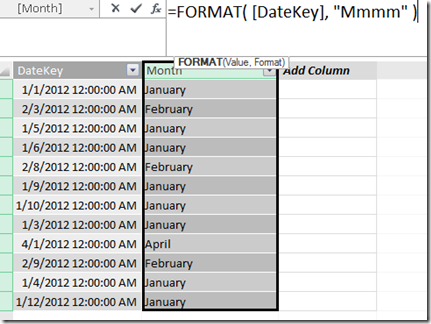

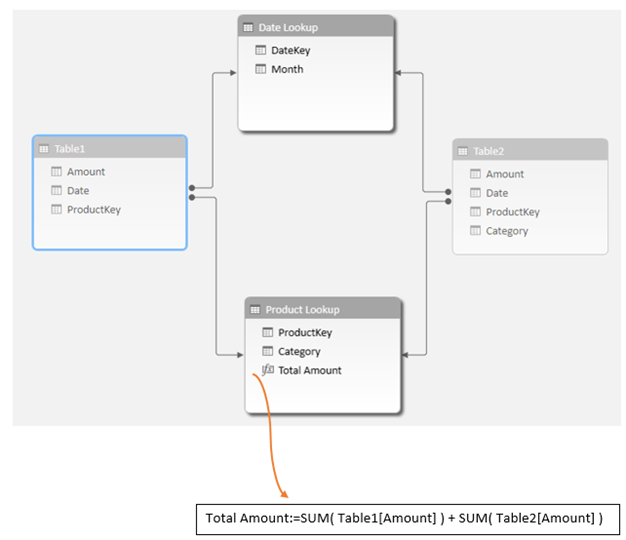

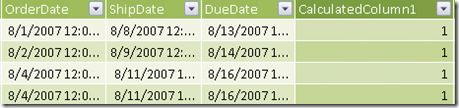

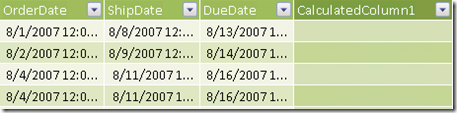

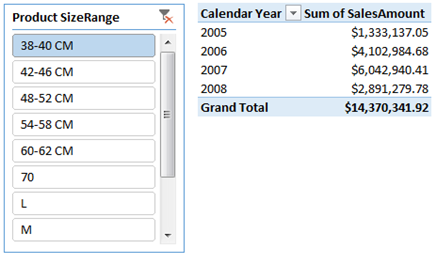

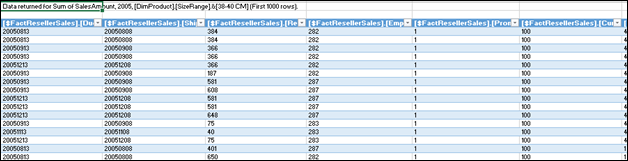

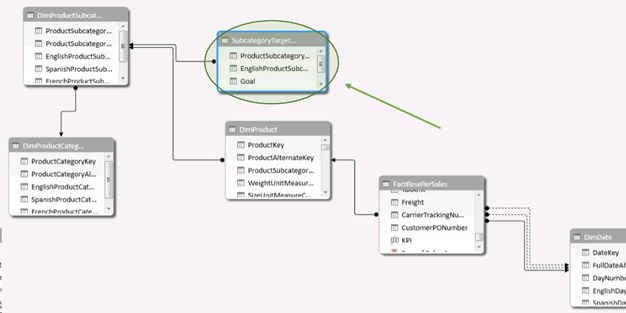

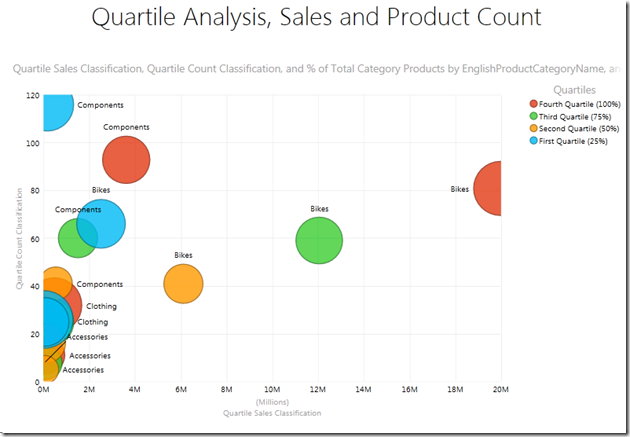

The result is highly efficient and more easily maintained. Another way in which this process can be done, if there are existing relationships among tables, is by the process of ‘expanding columns’. These are columns that are automatically loaded and represent a link to another table that has a relationship with the table we are loading. You can see them in the UI as:

Following a similar approach to the one outlined before, we can device a single M script to do all the work. This time, we will expand the columns without the need to explicitly join tables, achieving the same denormalized result:

let

Source = Sql.Database("localhost", "AdventureWorksDW2012"),/* load DimProduct */

qryProd = Source{[Name="DimProduct"]}[Data],/* retrieve Subcategory Name */

qrySubCat = Table.ExpandRecordColumn(

qryProd,

"DimProductSubcategory",

{"EnglishProductSubcategoryName", "DimProductCategory" }),/* retrieve Category Name */

qryCat = Table.ExpandRecordColumn(

qrySubCat,

"DimProductCategory",

{"EnglishProductCategoryName"}),/* select columns of interest */

Product = Table.SelectColumns (qryCat , {

"ProductKey",

"EnglishProductName",

"EnglishProductSubcategoryName",

"EnglishProductCategoryName",

"Color",

"ModelName" } )in

Product

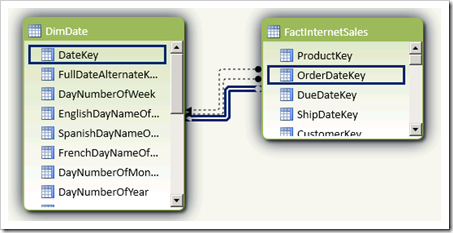

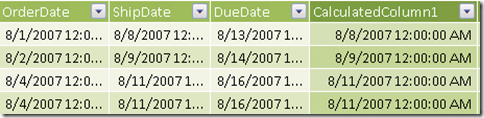

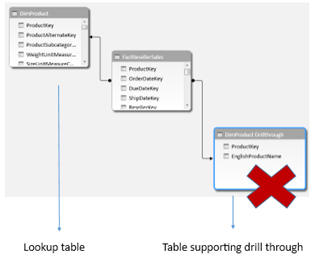

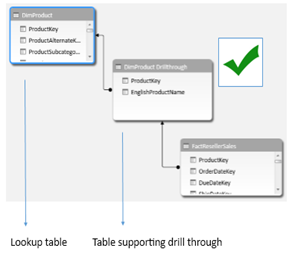

The Table.ExpandRecordColumn function allows you to navigate pre-established relationships. Notice the first parameter is the table we are working with and the second parameter is the column we will be expanding. If we are in the ‘many’ side of the relationship, we will see a ‘Value’ string which indicates there is a single value to be returned from the lookup table. Otherwise, we will see a ‘Table’ string.

Finally, notice that when expanding Subcategory values we not only retrieve the EnglishProductSubcategoryName (a column we want in the final resulset), but also we are retrieving a column called ‘DimProductCategory’. This is a column with a reference to the DimProductCategory table, which is required to further expand the resultset, allowing us to jump one more step and retrieve the EnglishProductCategoryName.